Introduction

Cloudflare has become one of the most critical pieces of global internet infrastructure. As a CDN, DNS provider, security layer, and performance optimization service, it sits between users and millions of websites worldwide. When Cloudflare goes down, the internet feels it.

The incidents in November and December 2025 serve as stark reminders that even the most sophisticated infrastructure can fail, and when it does, the impact is global.

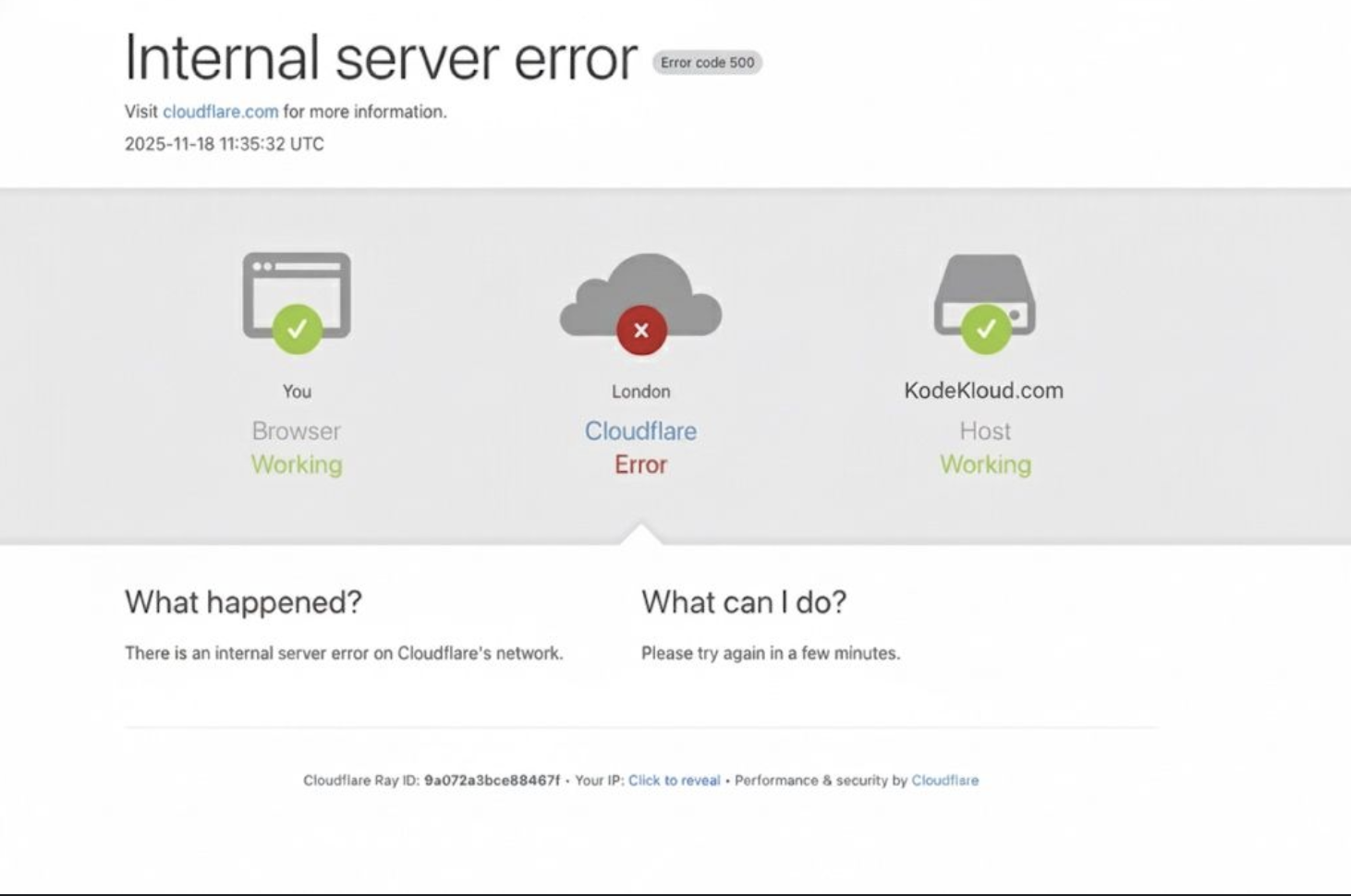

Incident 1: November 18, 2025

On November 18, 2025, Cloudflare experienced a serious global failure that produced widespread “500 Internal Server Error” responses and took down many websites and services. Users reported being unable to reach ChatGPT, X (formerly Twitter), and other applications and platforms.

What Happened

According to Cloudflare’s official analysis, the root cause was an error in generating a configuration file for “Bot Management”. The file grew too large, causing the system responsible for processing requests through Cloudflare’s network to crash.

The outage lasted several hours before services stabilized. In its postmortem, Cloudflare attributed the failure to a bug in the logic that generates the Bot Management feature file.

Impact

- Global service degradation affecting millions of websites

- Major platforms like ChatGPT and X became inaccessible

- Extended downtime lasting several hours

- Widespread user frustration and business impact

Incident 2: December 5, 2025

Less than three weeks after the November outage, Cloudflare experienced another widespread service disruption. According to the official announcement, problems started around 08:47 UTC and were fully resolved around 09:12 UTC, for a total impact of approximately 25 minutes.

What Happened

Cloudflare identified the cause as a change to the “body parsing logic” in its firewall, made while mitigating a recently discovered vulnerability related to React Server Components. The change unexpectedly triggered an error in request processing.

Impact

- Slowed or disabled access to many websites and services

- Global services affected including Zoom, LinkedIn, and various platforms

- Even status tracking services (like Downdetector) became unavailable

- Cloudflare emphasized that this was not a cyberattack; the failure stemmed from an internal configuration and firewall change

Technical Analysis and Root Causes

The two incidents point to specific technical causes, and to safeguards that failed to contain them:

November 18 Incident

The failure occurred in the system responsible for “Bot Management.” An error in an automatically generated file (the feature file) left the system unable to process requests properly, leading to global service degradation.

Key Issues:

- Automated configuration generation failed

- File size exceeded system limits

- Insufficient validation before deployment

- Lack of circuit breakers to prevent cascading failures
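The validation gap listed above can be illustrated with a small pre-publish check. This is a minimal sketch, not Cloudflare's actual pipeline: the JSON layout, the `MAX_FEATURES` and `MAX_BYTES` limits, and both function names are assumptions made for illustration. The idea is that a generated file is checked against the limits its consumer can handle, and the last known good file stays in place if the check fails.

```python
import json

MAX_FEATURES = 200      # hypothetical limit the consuming service can handle
MAX_BYTES = 64 * 1024   # hypothetical size budget for the generated file


def validate_feature_file(raw: bytes) -> list[str]:
    """Return a list of problems; an empty list means the file may be published."""
    problems = []
    if len(raw) > MAX_BYTES:
        problems.append(f"file is {len(raw)} bytes, limit is {MAX_BYTES}")
    try:
        features = json.loads(raw)["features"]
    except (ValueError, KeyError, TypeError) as exc:
        return problems + [f"file cannot be parsed: {exc!r}"]
    if not isinstance(features, list) or not all(isinstance(f, str) for f in features):
        return problems + ["'features' must be a list of strings"]
    if len(features) > MAX_FEATURES:
        problems.append(f"{len(features)} features, limit is {MAX_FEATURES}")
    if len(set(features)) != len(features):
        problems.append("duplicate feature names")
    return problems


def choose_file_to_publish(candidate: bytes, last_known_good: bytes) -> bytes:
    """Publish the candidate only if it validates; otherwise keep the previous file."""
    return candidate if not validate_feature_file(candidate) else last_known_good
```

Running the same check in the generator and in the consumer means an oversized file is rejected at either end instead of crashing the process that loads it.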

December 5 Incident

A change to the firewall's body parsing logic, implemented to address a security vulnerability related to React Server Components, led to unexpected errors. This shows that even security measures can become a source of failure when they are not sufficiently tested.

Key Issues:

- Security patch introduced breaking changes

- Insufficient testing of firewall logic changes

- No automatic rollback for failed changes

- Production deployment without proper staging validation
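One common way to address the rollback and staging issues above is a staged rollout that reverts automatically when error rates rise. The sketch below is an illustration under stated assumptions, not a description of Cloudflare's deployment system: `apply_fraction` and `error_rate` are hypothetical callbacks standing in for a real deployment tool and a real metrics source, and the 1% threshold is arbitrary.

```python
from typing import Callable, Sequence


def staged_rollout(
    stages: Sequence[float],
    apply_fraction: Callable[[float], None],
    error_rate: Callable[[], float],
    max_error_rate: float = 0.01,
) -> bool:
    """Widen a change stage by stage; roll back at the first unhealthy stage.

    Returns True if the change reached the final stage, False if it was rolled back.
    """
    for fraction in stages:
        apply_fraction(fraction)       # expose the change to this share of traffic
        if error_rate() > max_error_rate:
            apply_fraction(0.0)        # automatic rollback: remove the change everywhere
            return False
    return True
```

A call such as `staged_rollout([0.01, 0.1, 1.0], deploy, measure)` would expose the change to 1% of traffic first, so a faulty change is reverted before it reaches the whole fleet.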

Common Patterns

In both cases, the cause was not an external attack but internal factors:

- System complexity and interdependencies

- Configuration and automation dependencies

- Changes that were incorrectly or insufficiently tested before production

- Infrastructure sensitivity to human error or process failures

What This Means for Teams Relying on Cloudflare

Based on these incidents, the following implications are critical:

1. No 100% Uptime Guarantee

Even the largest and most established CDN, infrastructure, or edge provider can experience serious failures. There is no such thing as a guarantee of 100% availability.

2. Automation Can Fail

Automation, configuration changes, and security patches can cause problems just as easily as external attacks. Every change needs thorough testing and validation.

3. Multi-Vendor Strategy is Essential

If you run critical services (e-commerce, SaaS, high-traffic services), you need fallback plans, backup providers, and redundancy strategies.

4. Transparency and Monitoring

- Monitor provider status pages and postmortem reports

- Respond quickly to problems

- Prepare for incident response

- Have clear runbooks for when third-party services fail

Recommendations for Infrastructure Teams

1. Implement Multi-Vendor Strategies

Don’t put all your eggs in one basket. Consider using multiple CDN providers for critical services, with automatic failover capabilities.
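The selection logic behind automatic failover can be quite small. The following is a minimal sketch, assuming a priority-ordered list of providers and a hypothetical `is_healthy` probe; the provider names are placeholders, and the step that actually moves traffic (for example a DNS or load balancer update) is deliberately left out.

```python
from typing import Callable, Optional, Sequence


def pick_provider(
    providers: Sequence[str],
    is_healthy: Callable[[str], bool],
) -> Optional[str]:
    """Return the highest-priority healthy provider, or None if all are down."""
    return next((name for name in providers if is_healthy(name)), None)


# Usage with a stubbed health table; real probes would replace the dict lookup.
status = {"primary-cdn": False, "backup-cdn": True}
active = pick_provider(["primary-cdn", "backup-cdn"], lambda name: status[name])
```

Keeping the priority order explicit makes it straightforward to fail back to the primary provider once its probe passes again.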

2. Test All Changes Thoroughly

All infrastructure changes, especially at scale, need comprehensive testing in staging environments that mirror production as closely as possible.

3. Implement Circuit Breakers

A circuit breaker stops sending requests to a dependency that keeps failing and serves a fallback instead, which keeps one failure from cascading into others. When one service fails, the rest should be able to continue operating.
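As a rough illustration of the circuit breaker pattern, the sketch below opens the circuit after a number of consecutive failures, answers from a fallback while it is open, and allows a single trial call once a cooldown has passed. The class, its parameter names, and the default values are assumptions chosen for the example, not a specific library's API.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after      # seconds to wait before a trial call
        self.clock = clock                  # injectable so tests can control time
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, func: Callable[[], T], fallback: Callable[[], T]) -> T:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                return fallback()           # open: skip the dependency entirely
            # Cooldown elapsed (half-open): fall through and allow one trial call.
        try:
            result = func()
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()   # open, or re-open after a failed trial
            return fallback()
        self.failures = 0                   # success closes the circuit
        self.opened_at = None
        return result
```

A caller might wrap a request to a third-party API as `breaker.call(fetch_live, serve_cached)`, where both function names are placeholders for application code.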

4. Monitor Dependency Health

Understanding your dependency chain helps identify potential failure points before they become critical. Set up monitoring for all third-party services you depend on.
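A basic dependency monitor can be sketched with the Python standard library alone. In this sketch the endpoint names and URLs are placeholders, and treating any 2xx response as healthy is an assumption; a real check would usually follow each provider's documented status or health endpoint.

```python
import urllib.request
from typing import Callable, Dict, List


def http_probe(url: str, timeout: float = 3.0) -> bool:
    """Return True if the URL answers with a 2xx status within the timeout."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except Exception:
        return False    # any failure (DNS, timeout, HTTP error) counts as unhealthy


def check_dependencies(
    endpoints: Dict[str, str],
    probe: Callable[[str], bool] = http_probe,
) -> Dict[str, bool]:
    """Probe every named dependency and return its health as a name-to-bool map."""
    return {name: probe(url) for name, url in endpoints.items()}


def unhealthy(results: Dict[str, bool]) -> List[str]:
    """List the dependencies that failed their probe, sorted for stable alerts."""
    return sorted(name for name, ok in results.items() if not ok)
```

Running `check_dependencies` on a schedule and alerting when `unhealthy` returns a non-empty list gives an early signal that is independent of the provider's own status page.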

5. Have Incident Response Plans

Prepare clear runbooks for when third-party services fail. Know who to contact, what to monitor, and how to communicate with users.

6. Balance Cost and Reliability

While relying on a single vendor can reduce costs, it increases risk. Smart engineering teams balance cost optimization with reliability and redundancy.

Cost Implications

For businesses relying solely on Cloudflare:

- Lost Revenue: Downtime directly impacts revenue for e-commerce and SaaS platforms

- Reputation Damage: Extended outages can damage customer trust

- Emergency Costs: Teams often incur additional costs during incident response

- Opportunity Cost: Time spent on incident response instead of product development

Conclusion

The recent Cloudflare outages serve as a powerful reminder that no infrastructure is 100% reliable. Even the most sophisticated, globally distributed systems can fail due to configuration errors, automation bugs, or insufficient testing.

Smart engineering teams plan for failures, implement redundancy, and maintain flexibility in their vendor relationships. While cost optimization is important, reliability and redundancy should never be sacrificed for short-term savings.

The incidents of November and December 2025 show us that infrastructure failures are not just possible, they’re inevitable. The question isn’t whether your infrastructure provider will fail, but how well you’re prepared when they do.
